Voice assistants are becoming more and more popular by the day.

They started out on our phones, but now they’ve made it into the speakers in our homes and cars, ready to answer our questions and follow our commands.

For their users, voice assistants provide a convenient hands-free way of performing certain actions, like playing a song, checking the traffic reports, setting reminders or turning lights on and off.

For companies, this convenience also provides a novel way of generating engagement, sometimes more so than websites or phone apps themselves.

In Alexa, the most popular voice assistant today, voice apps are called “skills” and can be installed in a few clicks (or voice commands), just like apps can be installed on phones through app stores.

Creating a great skill has a learning curve, like any task, but if you haven’t created one yet I bet the curve is less steep than you might think. To demonstrate that, I’m going to create a personal skill just for myself that lets me check the waves on any beach in my region, so I can decide whether it’s a good time to grab my surfboard and hit the waves, or not so much.

If you don’t own a smart speaker with Alexa, don’t worry: you don’t need one to follow this tutorial. During development everything can be tested right in the browser using your laptop’s microphone, and once you’ve finished the skill you can install it on your phone if you created something cool and want to have it in your pocket.

Motivation

Of the three main voice assistants, Alexa is by a fair margin the one supported by the most devices. That doesn’t really come as a surprise: Amazon has made the entry barrier to supporting Alexa on IoT devices very low, and most manufacturers have chosen to support it.

On top of that, and although this is personal and perhaps depends on the language in which you interact with voice assistants, it’s also the one with the most natural voice. As picky as that may sound, within a matter of days it made me enjoy talking to Alexa more than I ever enjoyed using Google Assistant in the several years I had it on my phone.

Since then I’ve created a few skills to automate my home and daily routines, and now I wanted to create one to automate the fun parts.

Prerequisites

To follow this tutorial you will need accounts on a couple of Amazon services.

First, you need to log in or sign up at https://developers.amazon.com. Worry not: if you have a regular Amazon account, you can log in with it here. This one is mandatory.

Second, you will need to open an account at https://aws.amazon.com/ in case you don’t have one. This one is optional, as you can host your skill on any platform, but for this quick demo it’s the easiest way to get up and running. The reason is that Alexa skills need a backend, and we’re going to use AWS Lambda for it: it’s very convenient, since both are Amazon products that integrate easily, and it will be free because you get one million free requests per month.

Accounts at https://aws.amazon.com are different from accounts at https://developers.amazon.com. AWS will require you to enter payment details like a credit card but, as mentioned above, you’d have to send more than 23 Alexa commands every minute of the day, non-stop, for an entire month to get charged (one million requests spread over the 43,200 minutes of a 30-day month).

What are we building?

I live in Galicia, a region in NW Spain that has around 1500 km (~930 miles) of coast, much of which belongs to beaches facing the Atlantic Ocean with very good conditions for surfing.

I wanted to create a custom skill that lets me ask Alexa about the sea forecast (mostly wave height and direction) on a particular beach on a particular date (or right now!), so I can decide whether or not it’s worth getting the board and heading to the beach, or which beach I should go to.

That simple skill will read the sea forecast from an open API that the local meteorological institute provides, which has references for over 870 beaches in the region.

I won’t get into how I crunch the JSON data that this API returns, as it’s not really interesting. It’s something any JavaScript developer could do with an amount of patience inversely proportional to how good the API docs are.

First step: Creating the skill

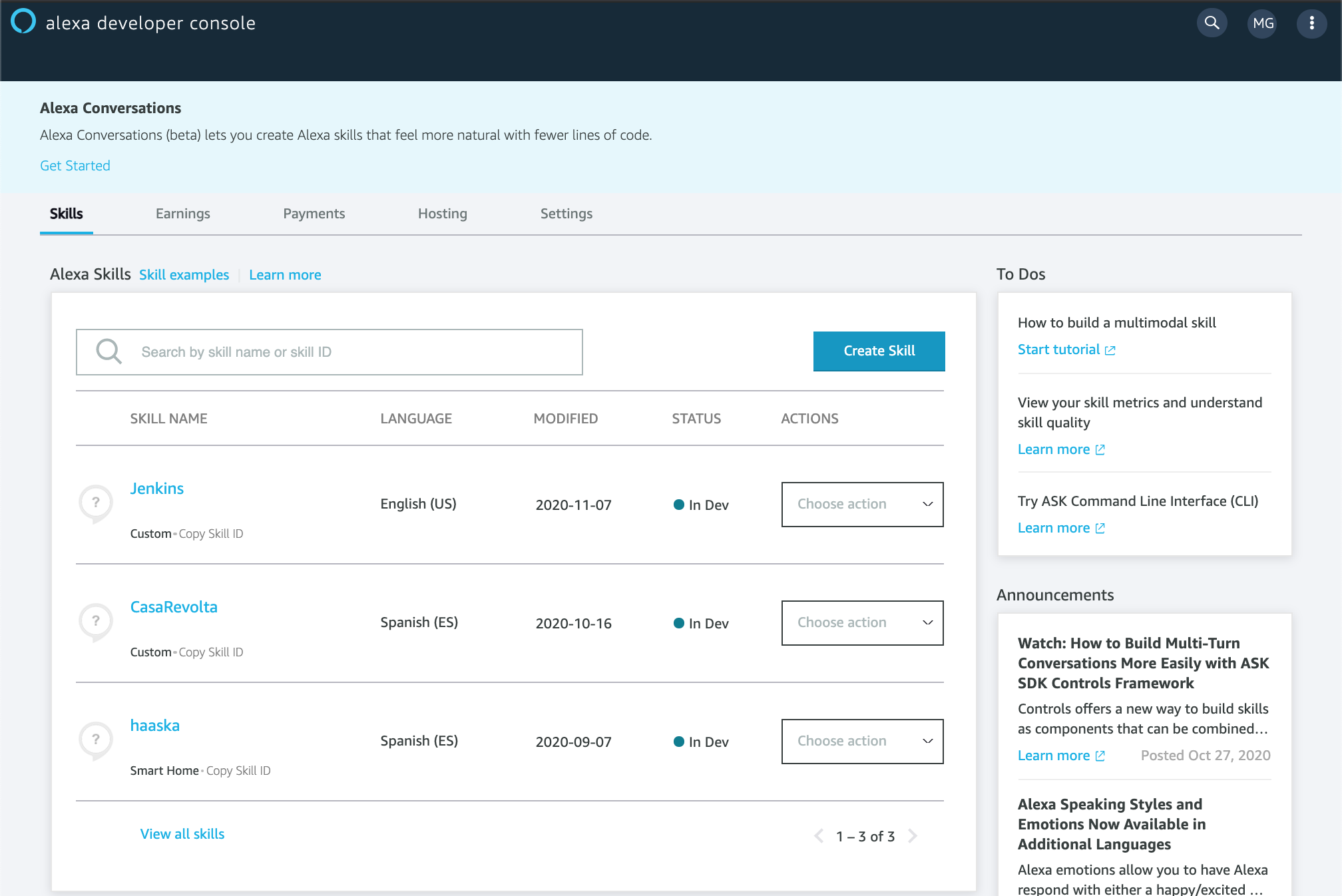

Once you’ve logged into https://developers.amazon.com and clicked on the Amazon Alexa section of the dashboard, you will be in the portal for Alexa developers. From there you can open the Alexa console, which will take you to your list of skills.

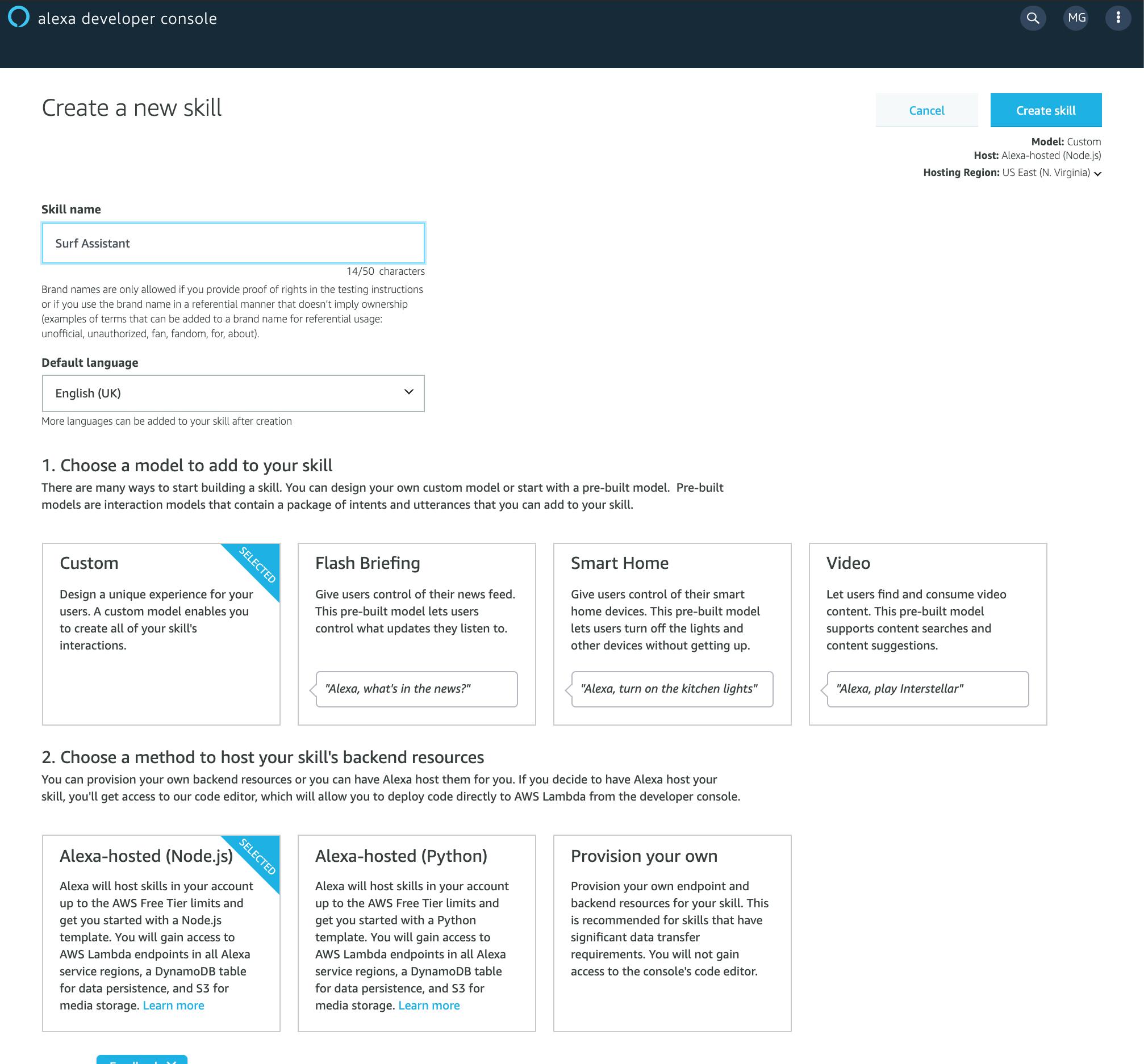

Click on the “Create Skill” button and choose a two-word name for the skill. That name will be the invocation name of your skill, that is, the key phrase you need to say so Alexa knows which skill you’re invoking. More on that later.

Let’s name our skill Surf Assistant. Here we’re also going to select the language in which we’re going to interact with the skill (English - UK), the model for the skill (Custom) and the host for the skill’s backend (Alexa-hosted - Node.js), and then hit Create skill.

Alexa-hosted is just a friendlier way of saying AWS Lambda.

Note that here you can also choose the region, and that depending on the language you choose, it may not be available in all regions. For instance, for European Spanish you should choose EU (Ireland).

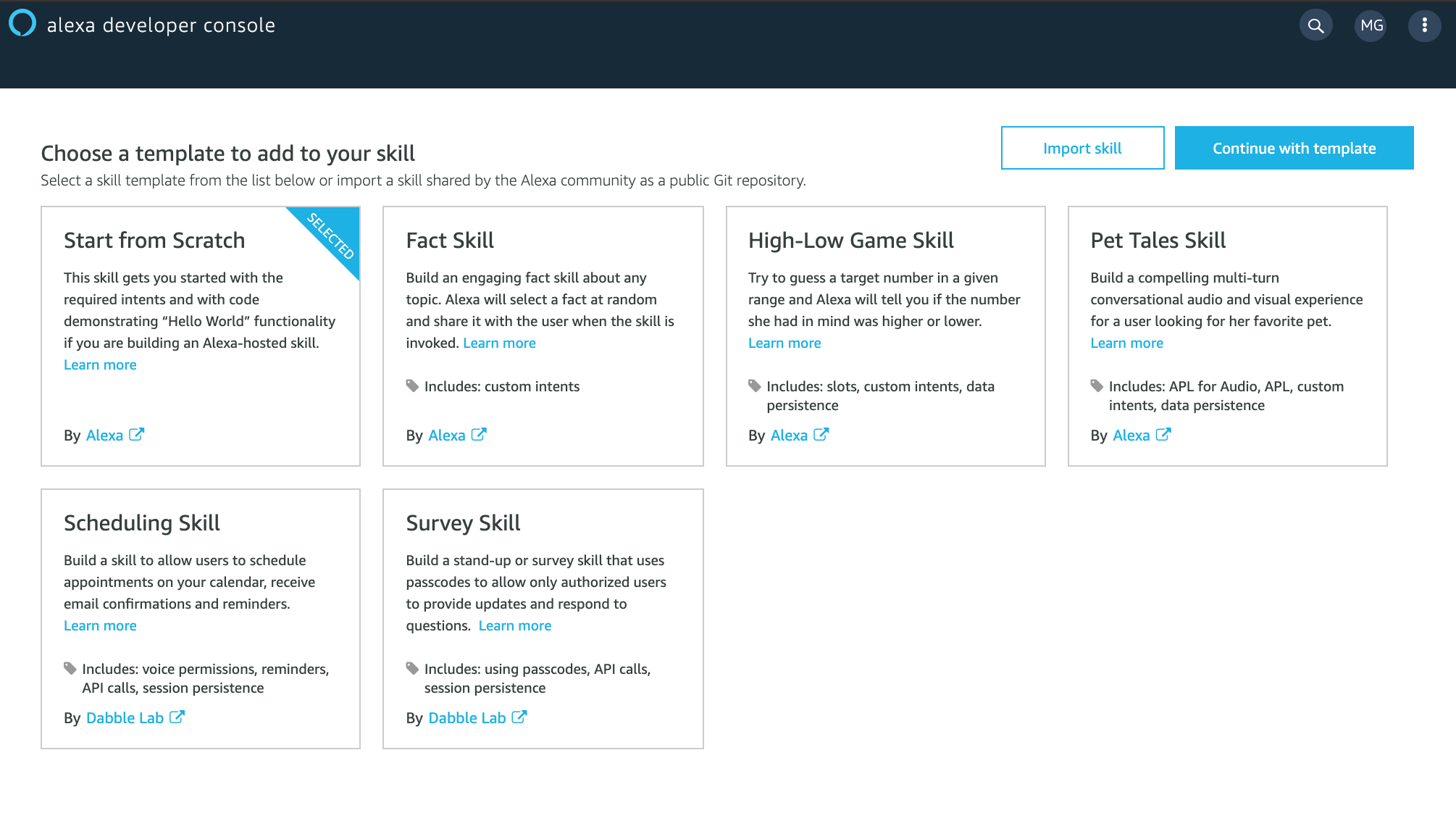

In the next step you’re going to select Start from scratch.

Your skill is created! Now we have to create an interaction model.

Second step: Create your interaction model.

In essence every Alexa skill follows a very simple pattern.

You define a list of commands that your app will know how to handle, called Intents, and for each one of them you define many Utterances, that is, all the phrases you can imagine users saying to convey that command. That is necessary because human languages are flawed: there are many ways of saying the same thing, and users may convey the same order using completely different sentences.

Let’s see it with an example.

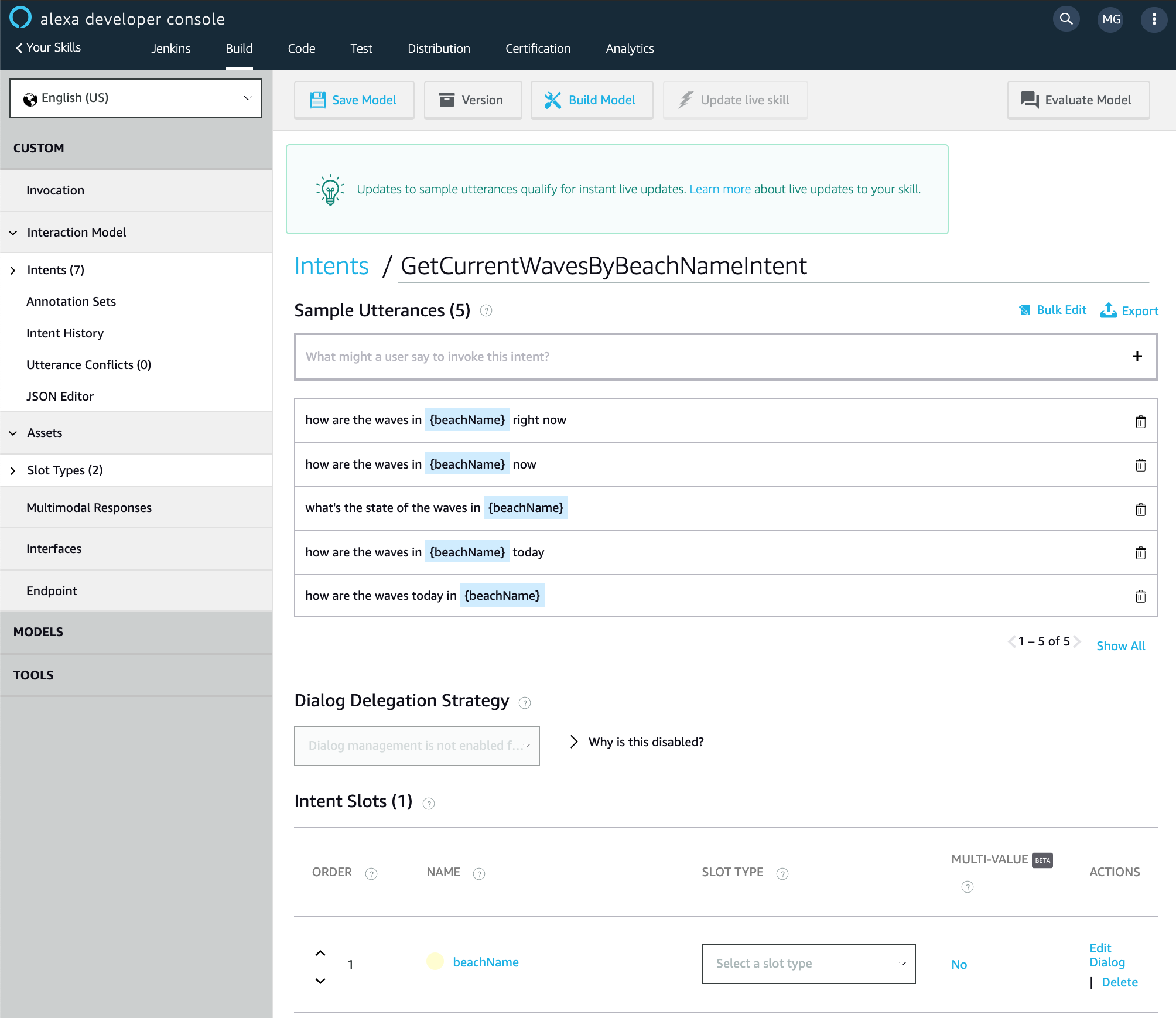

We want to create an intent for when the user wants to check the sea status right now on a specific beach. Following convention, it should be camel-cased and end in Intent, so we’re going to name it GetCurrentWavesByBeachNameIntent.

Inside the intent is where you add examples of the sentences users could say to trigger it. Those are called Utterances. Later on, Alexa’s algorithm will process the utterances to produce an AI model that really understands the sentences, so it will recognize what the user means most of the time. Even if the user phrases things a little differently from how we anticipated, uses synonyms or changes the order of a few words, Alexa will usually be able to understand the order, but the more utterances you provide, the smarter the algorithm will be.

There’s another detail we haven’t dealt with yet. Since this intent needs the name of the beach we want the forecast for, we have to define a Slot, which we delimit with braces.

A Slot is a part of the sentence that is variable and has to be extracted and made available to our skill’s backend.

Slots need to have a type associated. Alexa defines many built-in types for the most common usages, like numbers, dates, ordinals and phone numbers, but also a lot more that are very specialized: actors, airports, countries, fictional characters… You can check the full list here.

For now our {beachName} slot has no type associated, and it needs one.

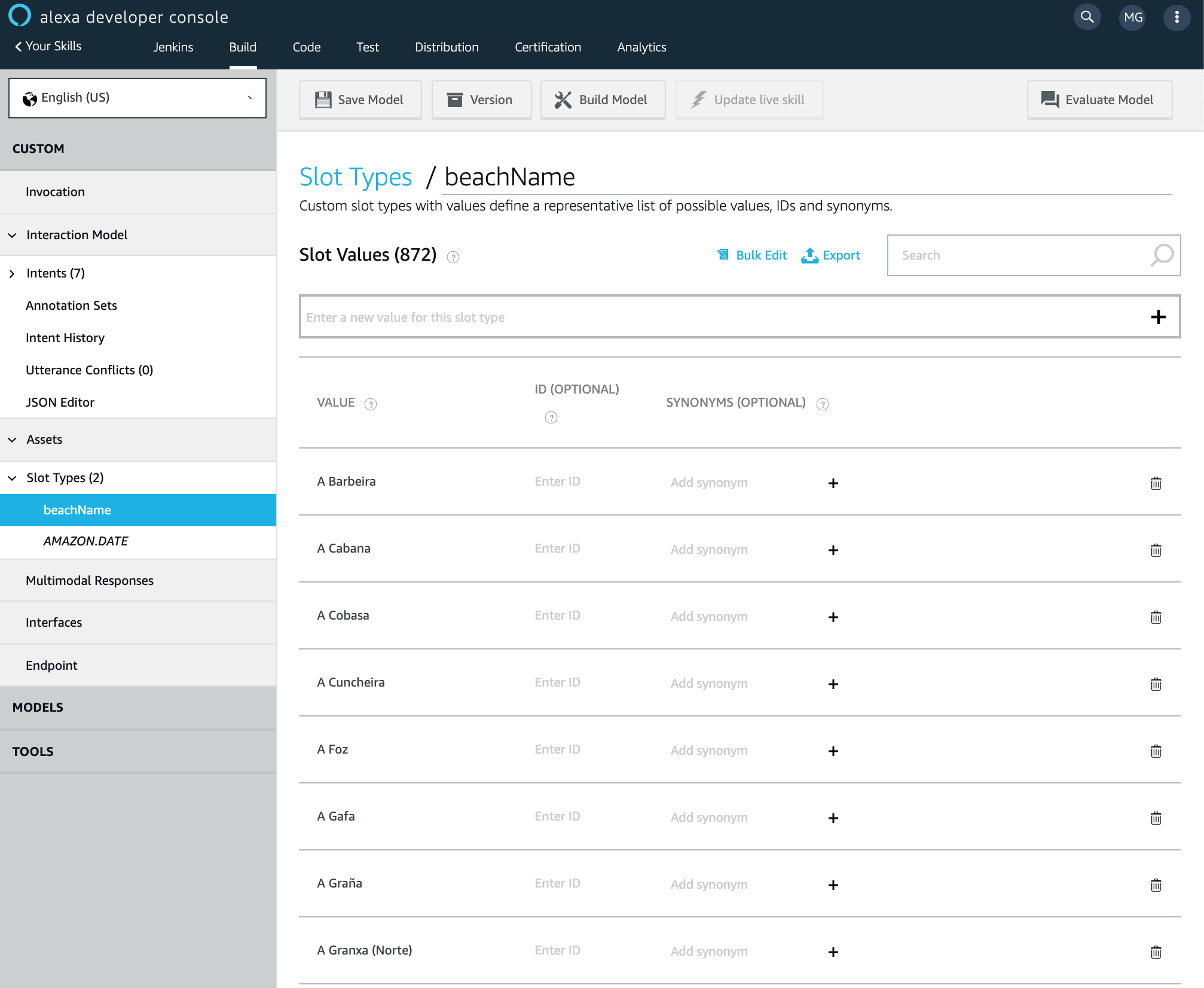

Since Alexa doesn’t have a built-in slot type for the names of all 873 Galician beaches, we can create our own slot type and populate it with all the possible options.

If you only have a few values you can populate it using the UI, but if you have hundreds, like in this case, it’s far more convenient to upload a CSV file, which I crafted using the data from the forecast API and uploaded using the Bulk Edit option. Once the slot type is created you can assign it to the slots you created in the previous step. Custom slot types teach Alexa to recognize words, like names or places, that are not part of any dictionary and that it would otherwise have a hard time understanding.
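As a rough illustration of how such a file could be produced, the sketch below turns a list of beach names into one-value-per-line text. The `toSlotValuesCsv` helper and the sample names are hypothetical, and it assumes Bulk Edit accepts one slot value per line; check the dialog in the console for the exact columns it supports.

```javascript
// Hypothetical helper: builds the text for the Bulk Edit dialog from a list
// of beach names. Assumption: one slot value per line is accepted.
function toSlotValuesCsv(names) {
  const cleaned = names
    .map(name => name.trim().replace(/\s+/g, ' ')) // normalize whitespace
    .filter(name => name.length > 0);              // drop empty entries
  // A Set removes exact duplicates while keeping first-seen order.
  return [...new Set(cleaned)].join('\n');
}

// Sample names for illustration only.
console.log(toSlotValuesCsv(['Bastiagueiro', ' Razo ', 'Bastiagueiro', '']));
```

Deduplicating before the upload keeps the list tidy when the source data mentions the same beach more than once.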

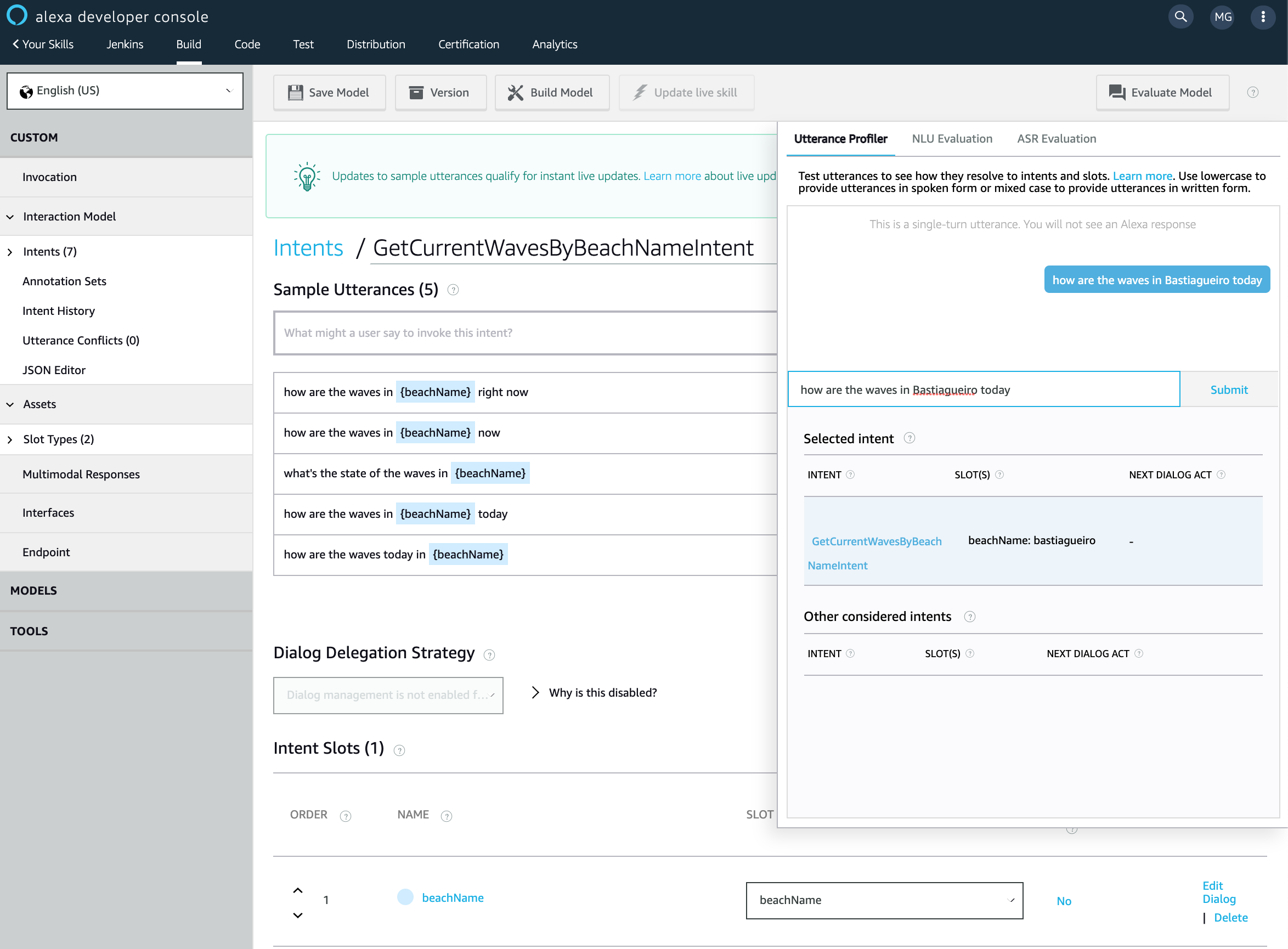

That’s it. Now we can click Save and Build Model on the top menu and, once it has finished, click on Evaluate Model to give it a go.

On the Utterance profiler you can type a sentence and check which intent Alexa recognized, the value of the slots, and other possible intents it considered.
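For reference, the model built in this step can be pictured as a plain object. This is a sketch of its shape as I understand the interaction model schema, not an export from the console: the sample utterances, the `GALICIAN_BEACH` type name and the two beach values are made up for illustration, and the built-in intents are left out.

```javascript
// Sketch of the interaction model. Utterances, type name and values are
// illustrative assumptions; built-in intents are omitted for brevity.
const interactionModel = {
  interactionModel: {
    languageModel: {
      invocationName: 'surf assistant',
      intents: [
        {
          name: 'GetCurrentWavesByBeachNameIntent',
          slots: [{ name: 'beachName', type: 'GALICIAN_BEACH' }],
          samples: [
            'how are the waves in {beachName}',
            'what is the sea like in {beachName} right now',
          ],
        },
      ],
      types: [
        {
          name: 'GALICIAN_BEACH',
          values: [{ name: { value: 'Bastiagueiro' } }, { name: { value: 'Razo' } }],
        },
      ],
    },
  },
};

// Sanity check: every {slot} used in a sample must be declared on its intent.
function undeclaredSlots(model) {
  const problems = [];
  for (const intent of model.interactionModel.languageModel.intents) {
    const declared = new Set((intent.slots || []).map(slot => slot.name));
    for (const sample of intent.samples || []) {
      for (const match of sample.matchAll(/\{(\w+)\}/g)) {
        if (!declared.has(match[1])) problems.push(`${intent.name}: ${match[1]}`);
      }
    }
  }
  return problems;
}

console.log(undeclaredSlots(interactionModel)); // prints []
```

The small checker is only there to show how samples, slots and types refer to each other by name; the console performs its own validation when you build the model.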

Now that Alexa understands intents, it’s time to write actual code to handle what happens when the user speaks one.

Third step: Handling intents.

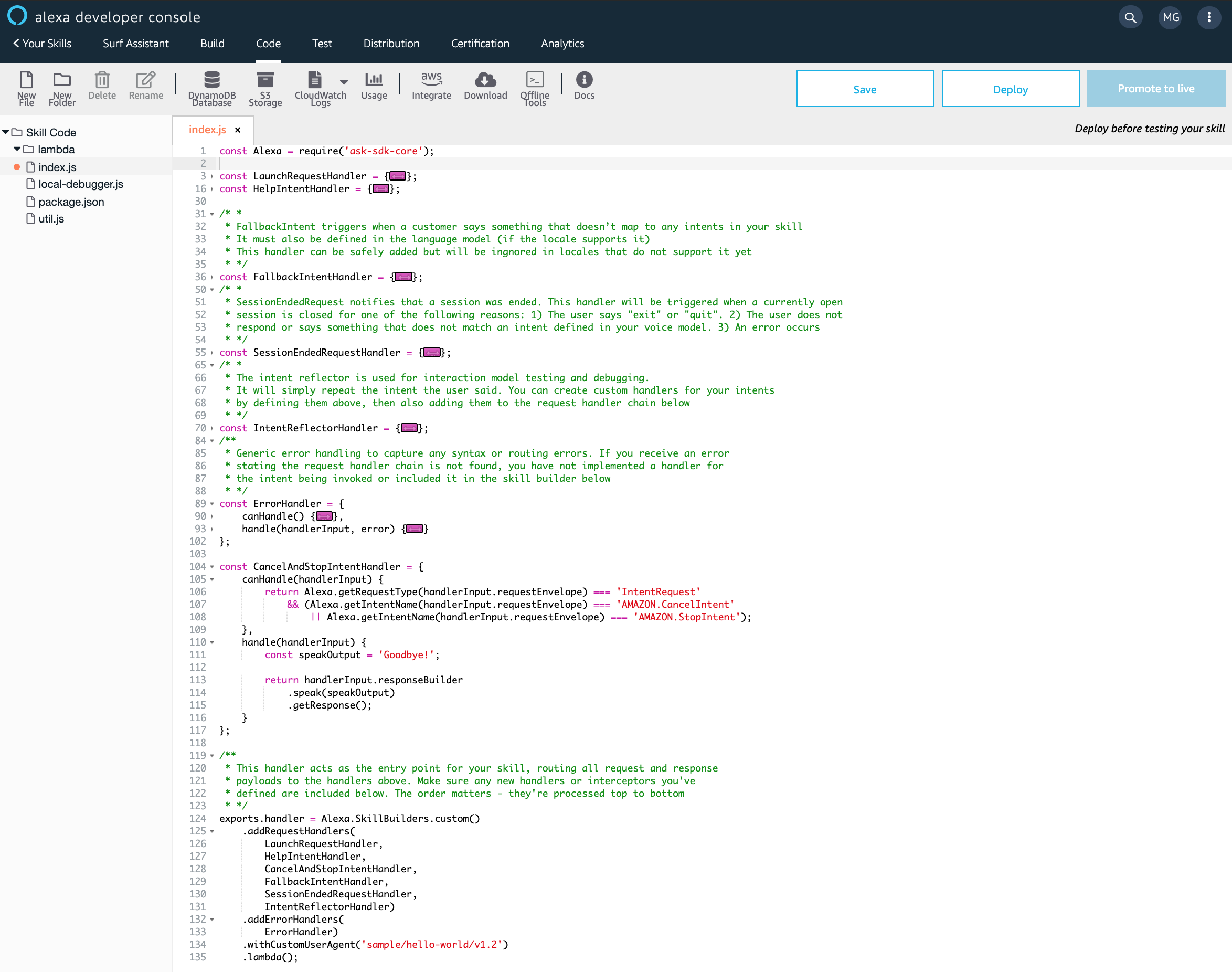

To write the code we’re not even going to spin up our preferred code editor yet. We are going to click on the Code tab on the top menu, which will take us to an online code editor with already functional template code.

The code is easy to grasp. There are a bunch of objects all ending in Handler, one for each of the default intents every app must implement, like CancelAndStopIntentHandler or ErrorHandler.

All the handlers have two function properties: canHandle and handle.

The names are pretty self-explanatory: canHandle is the one in charge of deciding whether a request can be handled by that handler, and handle does the actual work.
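To make that division of labour concrete, here is a toy dispatcher that mimics the pattern without the SDK. The `dispatch` function, the `HelloIntent` name and the plain-string return values are hypothetical simplifications; the real SDK does the routing for you and expects a response object rather than a string.

```javascript
// Toy handler following the canHandle/handle convention.
const HelloHandler = {
  canHandle(input) {
    const { request } = input.requestEnvelope;
    return request.type === 'IntentRequest' && request.intent.name === 'HelloIntent';
  },
  handle() {
    return 'Hello!';
  },
};

// Catch-all handler, registered last so it only runs when nothing else matches.
const FallbackHandler = {
  canHandle() {
    return true;
  },
  handle() {
    return "Sorry, I didn't get that.";
  },
};

// Hypothetical dispatcher: the first handler whose canHandle returns true wins.
function dispatch(handlers, input) {
  const handler = handlers.find(candidate => candidate.canHandle(input));
  if (!handler) throw new Error('No handler can process this request');
  return handler.handle(input);
}

const input = {
  requestEnvelope: { request: { type: 'IntentRequest', intent: { name: 'HelloIntent' } } },
};
console.log(dispatch([HelloHandler, FallbackHandler], input)); // prints Hello!
```

In this sketch the order of the array matters: a catch-all placed first would swallow every request.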

You probably don’t need to modify the basic intent handlers, as those are the ones present in most apps for basic features, like exiting the skill at any time by saying “Alexa stop”.

Instead you just have to add a new handler for the GetCurrentWavesByBeachNameIntent we added to our model and then pass it to Alexa.SkillBuilders.custom().addRequestHandlers.

Let’s see the final code.

const Alexa = require('ask-sdk-core');
const fetch = require('node-fetch');

const MeteoSixApiKey = 'SECRET'; // Secret API key for the public weather forecast service.
const DOMAIN = 'http://servizos.meteogalicia.es/apiv3/'; // Already ends in a slash.

// Looks up the beach by name, then requests the wave forecast for its id.
function getWaves(beachName) {
  // The beach name comes from speech, so encode it before putting it in a URL.
  return fetch(`${DOMAIN}findPlaces?location=${encodeURIComponent(beachName)}&types=beach&API_KEY=${MeteoSixApiKey}`)
    .then(response => response.json())
    .then(json => json.features[0].properties.id)
    .then(id => fetch(`${DOMAIN}getNumericForecastInfo?locationIds=${id}&variables=significative_wave_height,mean_wave_direction&API_KEY=${MeteoSixApiKey}`))
    .then(response => response.json());
}

function generateWaveStatusSentence(prediction) {
  // Boring code (omitted) to extract waveHeight and direction from a forecast.
  return `${waveHeight} meters from the ${direction}`;
}

function getPrediction(data, date) {
  // Boring JSON crunching to extract the prediction for the given moment.
}

const GetCurrentWavesByBeachNameIntentHandler = {
  canHandle(handlerInput) {
    return Alexa.getRequestType(handlerInput.requestEnvelope) === 'IntentRequest'
      && Alexa.getIntentName(handlerInput.requestEnvelope) === 'GetCurrentWavesByBeachNameIntent';
  },
  handle(handlerInput) {
    const { slots } = handlerInput.requestEnvelope.request.intent;
    const beachName = slots.beachName.value;
    return getWaves(beachName)
      .then(data => generateWaveStatusSentence(getPrediction(data)))
      .then(str => `Waves in ${beachName} are ${str} right now`)
      .then(phrase => handlerInput.responseBuilder.speak(phrase).getResponse());
  }
};

// ... keep all the default handlers unchanged unless you have a good reason not to.

exports.handler = Alexa.SkillBuilders.custom()
  .addRequestHandlers(
    LaunchRequestHandler,
    GetCurrentWavesByBeachNameIntentHandler, // the new handler
    HelpIntentHandler,
    CancelAndStopIntentHandler,
    FallbackIntentHandler,
    SessionEndedRequestHandler,
    IntentReflectorHandler)
  .addErrorHandlers(ErrorHandler)
  .withCustomUserAgent('sample/hello-world/v1.2')
  .lambda();

Let’s break that down.

Our handler’s canHandle function returns true when the intent is GetCurrentWavesByBeachNameIntent, and the handle function extracts the value of the slot from handlerInput.requestEnvelope.request.intent.slots, which is how the Alexa API wraps things.
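For orientation, the relevant part of the request looks roughly like the object below, trimmed to the fields this handler reads. The `readSlot` helper is hypothetical; it just adds a guard for requests where the slot is missing or empty, which the handler above does not do. If I remember correctly, ask-sdk-core also exports a getSlotValue utility for the same purpose.

```javascript
// Trimmed-down request envelope; real ones carry more fields (session, context...).
const requestEnvelope = {
  request: {
    type: 'IntentRequest',
    intent: {
      name: 'GetCurrentWavesByBeachNameIntent',
      slots: {
        beachName: { name: 'beachName', value: 'bastiagueiro' },
      },
    },
  },
};

// Hypothetical helper: returns the slot's spoken value, or undefined if absent.
function readSlot(envelope, slotName) {
  const intent = envelope.request.intent;
  const slot = intent && intent.slots && intent.slots[slotName];
  return slot && slot.value ? slot.value : undefined;
}

console.log(readSlot(requestEnvelope, 'beachName')); // prints bastiagueiro
```

With a guard like this, the handler could answer with a friendly "which beach?" instead of failing when Alexa did not catch the name.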

From that point on, the rest of the function is just regular JavaScript code. I call some API, extract the information I want from the returned JSON and build the response sentence.

Finally, and this is the last bit of the demo that is Alexa-specific, you pass the sentence you want Alexa to say to the response builder: handlerInput.responseBuilder.speak(phrase).getResponse().
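For the curious, the response that ends up going back to Alexa is a small JSON document. The sketch below imitates its speech part by hand, as far as I understand the response format; `buildSpeechResponse` and `escapeForSpeech` are hypothetical helpers, not SDK functions. The escaping step is an assumption worth checking: speech is sent as SSML, which is XML, so a beach name containing a character like & could otherwise produce invalid markup.

```javascript
// Hypothetical helper: escapes the characters that are special in XML/SSML.
function escapeForSpeech(text) {
  return text
    .replace(/&/g, '&amp;') // must run first so later entities are not re-escaped
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Hand-made imitation of the speech part of a skill response.
function buildSpeechResponse(text) {
  return {
    outputSpeech: {
      type: 'SSML',
      ssml: `<speak>${escapeForSpeech(text)}</speak>`,
    },
  };
}

console.log(JSON.stringify(buildSpeechResponse('Waves in Razo are 2 meters from the north west right now')));
```

In the real skill you would keep using the response builder; the point here is only to show what kind of object your Lambda function returns.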

Don’t forget to add the handler you’ve just defined to .addRequestHandlers() at the end of the file, and you’re done.

If you need to install third-party libraries, like I did with node-fetch, you add them to the list of dependencies in package.json like in any Node.js project, and AWS will take care of installing them when you deploy.

Final step: Deploy, test and install.

The deploy part is very simple. Click the Deploy button on the top-right corner of the screen above the code editor.

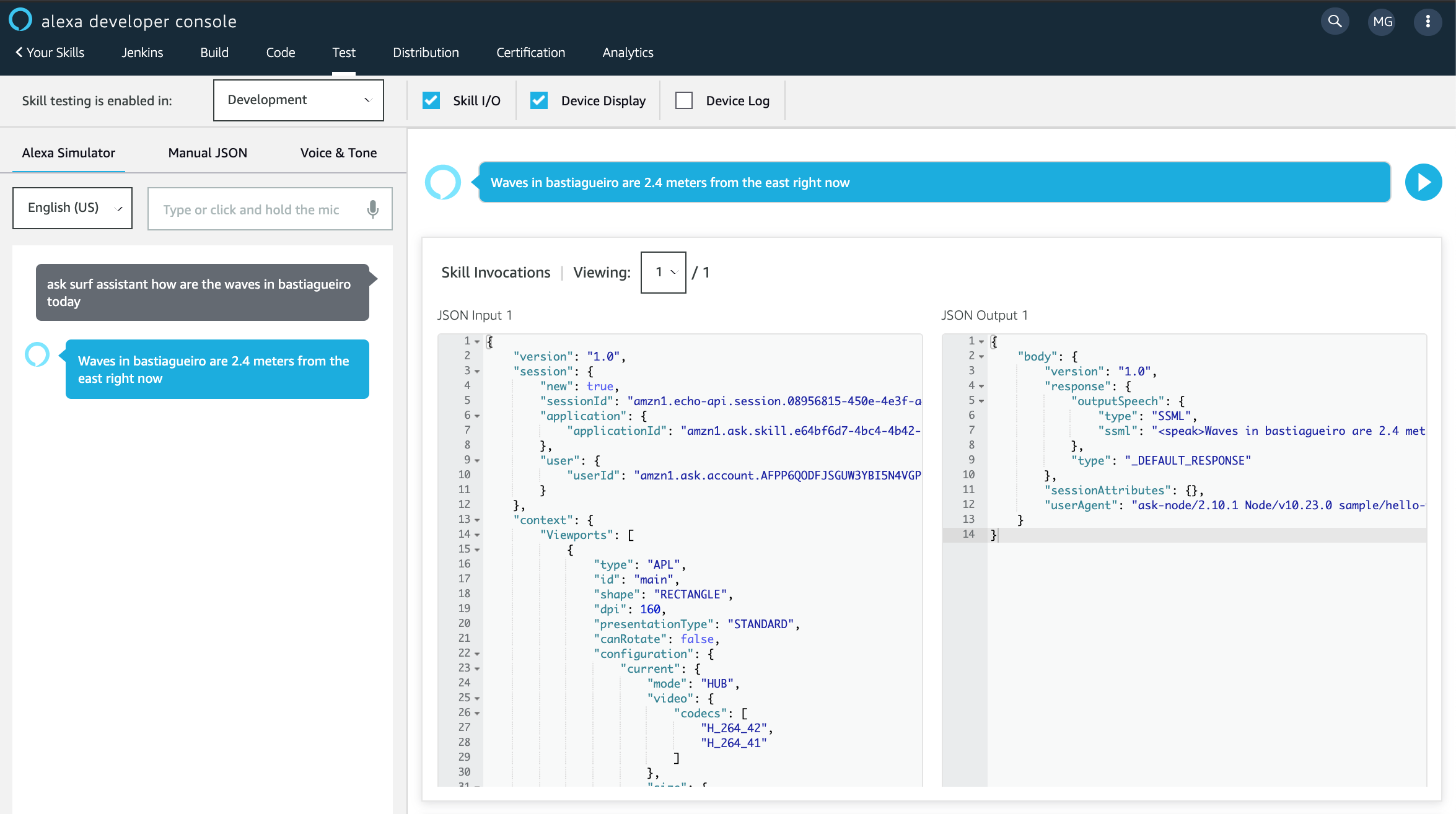

To test the app we go to the Test tab on the top menu.

You can either type or use the microphone and Alexa will respond. You also have nice panels to see the parsed input sent to your Lambda function and the response returned by it, which can be handy sometimes.

Note that, unlike the Evaluate Model interface you used while creating your voice model, in this test interface you have to type (or speak) the invocation name of your skill.

You don’t just say “How are the waves in Bastiagueiro today”, but “Ask surf assistant how are the waves in Bastiagueiro today”.

This is necessary because, just like a physical Alexa device, the test UI needs to know which skill you’re invoking so it can use the right voice model. There’s an experimental feature to remove this requirement and make Alexa find out if any of your installed skills can handle your command without you explicitly telling it which skill to use, but for now it is not available in all languages and regions.

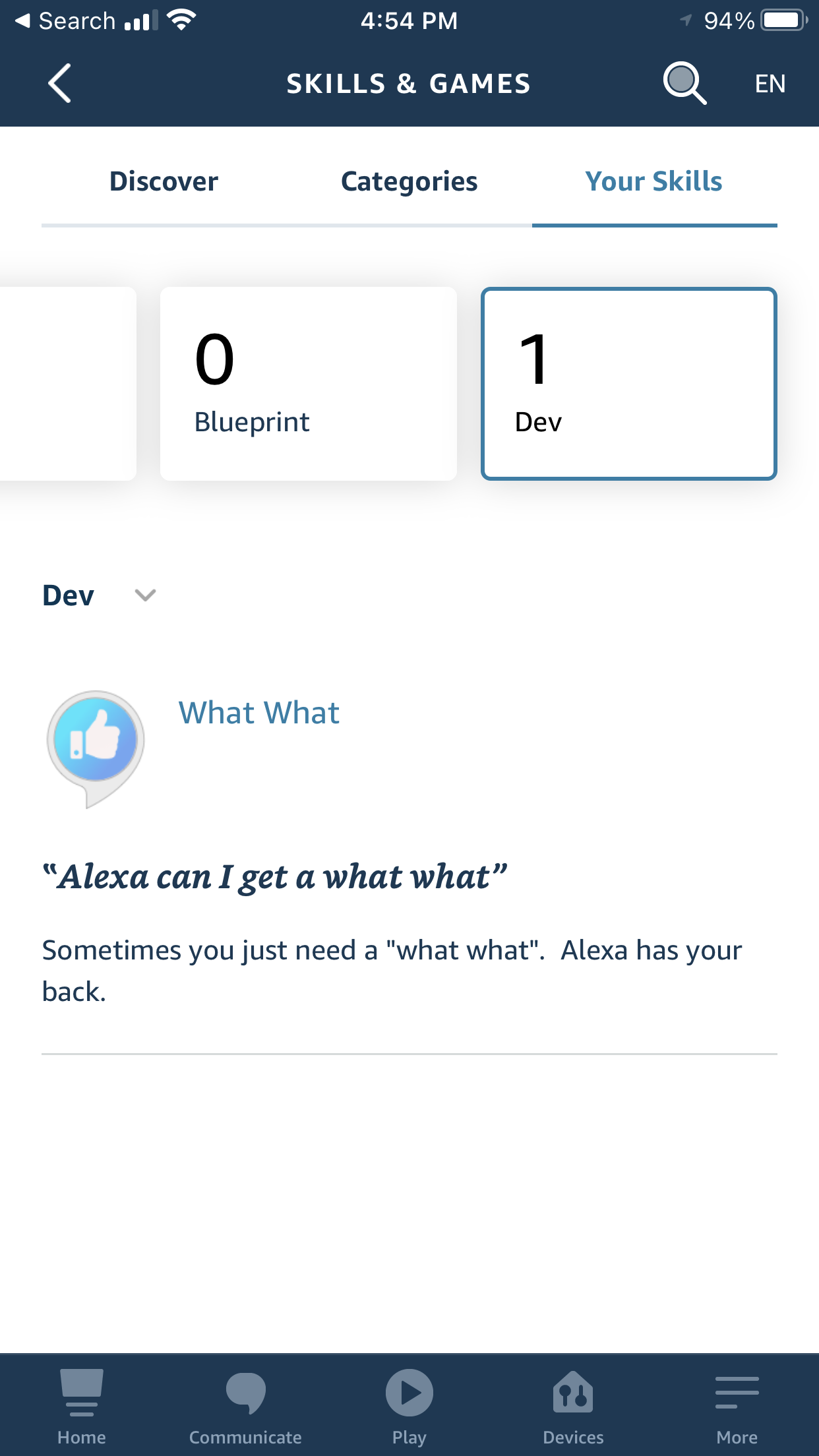

The last step is to install your skill into your Alexa account which should make it available on all your Alexa devices at once.

You can do it at https://alexa.amazon.com by going to the Skills menu, then to My Skills and then to Developer Skills, where you will see your custom skills ready to be installed.

Do it and start using it!

Wrapping up

That’s it. In a matter of hours we’ve created an app that lives on smart speakers and uses machine learning to understand voice commands and tell you the surf conditions on a beach of your choice.

How cool is that? Just a few years ago, building something similar in so little time looked like something taken out of a bad “hackers” movie.

The process is so simple that recently I found myself creating custom skills to improve my smart home, allowing me to ask how much time is left until the laundry is done, what the current price of electricity is and how much I’m consuming right now.

I think that voice assistants will open a new market for companies and brands to add an alternate path for users to consume their services while driving or washing the dishes.